Improving Performance of LLMs in Enterprise Insights Through Graph RAG

Large Language Models (LLMs) have quickly become central to enterprise strategies, supercharging innovation, efficiency, and customer experience. They’re versatile, bringing value to both customer-facing and internal operations. But as companies work to apply LLMs to enterprise data, they face two big hurdles:

- Hallucinations: LLMs can occasionally “hallucinate,” creating responses that sound plausible but are inaccurate or nonsensical. This risk makes companies cautious about relying on LLMs for critical data.

- Struggles with Structured Data: While LLMs excel with unstructured text, much of the enterprise data businesses rely on is structured, in tables or graphs, which LLMs typically can’t navigate as well.

To address these challenges, enterprises are exploring specialized Retrieval-Augmented Generation (RAG) approaches, such as Table Retrieval-Augmented Generation (Table RAG) and Graph Retrieval-Augmented Generation (Graph RAG). These methods let LLMs pull relevant structured data into their responses, making them more accurate and useful for specific use cases.

The App Orchid platform is taking RAG a step further for enterprises, with an approach fine-tuned to handle complex, structured data while ensuring high accuracy and efficiency. This approach helps companies leverage LLMs for deeper insights and informed decision-making without compromising reliability.

The Challenges

Hallucinations

Large Language Models are advanced AI systems that can generate human-like text. However, they can sometimes produce outputs that are factually incorrect or nonsensical, a phenomenon known as "hallucinations." These hallucinations can occur when the model tries to generate plausible-sounding information, even if it lacks the relevant knowledge or context. While LLMs have made significant strides in natural language processing, addressing hallucinations remains a key challenge in ensuring their reliability and trustworthiness.

To mitigate hallucinations in LLM outputs, techniques such as fact-checking, consistency checking, and leveraging external knowledge sources can be employed. Additionally, training LLMs on high-quality datasets and using appropriate evaluation metrics can help identify and reduce hallucinations.
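Consistency checking, one of the techniques above, can be sketched simply: ask the model the same question several times and treat disagreement among the answers as a warning sign. This minimal sketch assumes the answers have already been sampled from some LLM (that call is not shown). The normalization rule and the 0.6 agreement threshold are illustrative choices, not tuned values.

```python
from collections import Counter


def normalize(answer: str) -> str:
    """Crude normalization so trivially different phrasings compare equal."""
    return " ".join(answer.lower().strip().rstrip(".").split())


def consistency_check(answers: list[str], threshold: float = 0.6) -> tuple[str, float, bool]:
    """Return (majority answer, agreement ratio, is_consistent).

    `answers` are several responses sampled from an LLM for the same prompt.
    Low agreement suggests the model may be guessing, a possible hallucination.
    """
    if not answers:
        raise ValueError("need at least one sampled answer")
    counts = Counter(normalize(a) for a in answers)
    majority, votes = counts.most_common(1)[0]
    agreement = votes / len(answers)
    return majority, agreement, agreement >= threshold


if __name__ == "__main__":
    samples = ["Paris.", "paris", "Paris", "Lyon", "Paris"]
    print(consistency_check(samples))  # ('paris', 0.8, True)
```

Agreement is only a heuristic: a model can be consistently wrong, so this complements fact-checking against external sources rather than replacing it.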

Struggles with Structured Data

LLMs, while proficient in handling unstructured textual data, encounter difficulties when dealing with structured data like databases or large enterprise tables.

- Access and Integration: Integrating diverse and potentially large-scale external knowledge sources into the LLM's workflow can be complex and computationally expensive.

- Relationships and Dependencies: These models often struggle to understand the inherent relationships and dependencies within structured data, leading to inaccurate interpretations and misrepresentations.

- Format and Structure: The rigid formats and schemas of structured data can limit the LLM's ability to generalize and adapt to different query patterns.

- Complexity: The sheer volume and complexity of enterprise data tables can overwhelm the LLM's processing capabilities, resulting in slower response times and potential errors.

Consequently, specialized techniques like Table Retrieval Augmented Generation are necessary to bridge this gap and enable LLMs to effectively leverage the valuable insights contained within structured data sources.

Specialized Techniques: Retrieval Augmented Generation

What is Retrieval Augmented Generation

Retrieval-Augmented Generation (RAG) is a framework that enhances the capabilities of LLMs by incorporating external knowledge sources during the text generation process. In RAG, the LLM doesn't solely rely on its internal knowledge but retrieves relevant information from external sources like databases, knowledge graphs, or documents. This retrieved information is then used to augment the LLM's context, enabling it to generate more accurate, informed, and contextually relevant responses.
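The retrieve-then-augment loop described above fits in a few lines. This is a toy illustration: it scores documents by word overlap rather than the vector embeddings most production RAG systems use, and it stops at building the augmented prompt, because the call to an actual LLM depends on the provider. The function names and the prompt template are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercased word set; a stand-in for a real embedding model."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most words with the query."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Augment the user's question with retrieved context before calling an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (
        "Answer using only the context below. If the context is insufficient, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    docs = [
        "The Q3 refund policy allows returns within 30 days.",
        "Our headquarters moved to Austin in 2021.",
        "Support tickets are triaged within 4 hours.",
    ]
    print(build_prompt("What is the refund policy for returns?", docs, k=1))
```

The instruction to answer only from the context is what ties the generated text back to the retrieved sources.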

RAG is typically the least expensive and most widely used way to give an LLM additional knowledge for answering a question, because it supplies that knowledge at query time instead of retraining or fine-tuning the model.

By leveraging external knowledge, RAG helps mitigate the limitations of LLMs, such as hallucinations and outdated information, while improving their overall performance and reliability.

What is Table Retrieval Augmented Generation

Table Retrieval Augmented Generation (Table RAG) is a specialized implementation of the RAG framework designed to enhance LLM interactions with tabular data. In Table RAG, the LLM retrieves relevant information from structured tables, such as databases or spreadsheets, to augment its knowledge and generate more accurate and informative responses to user queries.

This approach is particularly useful in scenarios where the user's questions require specific data points or calculations from tables, as it allows the LLM to access and leverage this information directly.
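A minimal sketch of the Table RAG idea, assuming the table fits in memory as a list of dicts: keep only the columns named in the question (plus designated key columns) and the rows that mention a value from the question, then serialize that slice as a compact text table for the LLM's context. Real Table RAG implementations use more robust schema and cell retrieval, often embedding-based; the keyword-matching rules and function names here are illustrative assumptions.

```python
import re


def words(text: str) -> set[str]:
    """Lowercased word set used for simple keyword matching."""
    return set(re.findall(r"[a-z0-9_]+", text.lower()))


def select_slice(question: str, rows: list[dict], key_columns: list[str]) -> list[dict]:
    """Keep the key columns plus any column named in the question, and only the
    rows with a cell value that appears in the question (all rows if none do)."""
    if not rows:
        return []
    q = words(question)
    columns = [c for c in rows[0] if c in key_columns or c.lower() in q]
    matching = [r for r in rows if any(str(v).lower() in q for v in r.values())]
    return [{c: r[c] for c in columns} for r in (matching or rows)]


def to_markdown(rows: list[dict]) -> str:
    """Serialize rows as a pipe-delimited text table for the LLM prompt."""
    if not rows:
        return ""
    header = list(rows[0])
    lines = ["| " + " | ".join(header) + " |", "|" + "---|" * len(header)]
    lines += ["| " + " | ".join(str(r[c]) for c in header) + " |" for r in rows]
    return "\n".join(lines)


if __name__ == "__main__":
    sales = [
        {"region": "West", "quarter": "Q1", "revenue": 120, "units": 30},
        {"region": "East", "quarter": "Q1", "revenue": 95, "units": 22},
        {"region": "West", "quarter": "Q2", "revenue": 140, "units": 35},
    ]
    question = "What was revenue in the West region?"
    print(to_markdown(select_slice(question, sales, key_columns=["region", "quarter"])))
```

For the sample question, only the `region`, `quarter`, and `revenue` columns of the two West rows reach the prompt; the East row and the `units` column are left out, which keeps the context small.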

What is Graph Retrieval Augmented Generation

Graph Retrieval Augmented Generation (Graph RAG) is a specialized implementation of the RAG framework that uses knowledge graphs as the external knowledge source. In Graph RAG, the LLM retrieves relevant entities and the relationships between them from a knowledge graph, then uses them to generate more contextually accurate and informative responses. This approach is particularly effective where complex relationships between entities are crucial, as in knowledge-intensive fields such as science, medicine, and law. By drawing on the rich semantic information encoded in knowledge graphs, Graph RAG enables LLMs to give more comprehensive and insightful answers to user queries.
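The Graph RAG retrieval step can be made concrete with a small sketch. It stores a knowledge graph as (subject, relation, object) triples, finds entities mentioned in the question by plain string matching, and collects every triple within a fixed number of hops to use as LLM context. The entity matching, hop limit, and function names are simplifying assumptions; production systems typically use proper entity linking and a graph database.

```python
from collections import defaultdict

Triple = tuple[str, str, str]  # (subject, relation, object)


def build_index(triples: list[Triple]) -> dict[str, list[Triple]]:
    """Map each entity to every triple it appears in, as subject or object."""
    index: dict[str, list[Triple]] = defaultdict(list)
    for s, r, o in triples:
        index[s].append((s, r, o))
        index[o].append((s, r, o))
    return index


def retrieve_subgraph(question: str, triples: list[Triple], hops: int = 1) -> list[Triple]:
    """Breadth-first collection of triples within `hops` edges of any entity
    whose name appears in the question (naive string matching)."""
    index = build_index(triples)
    frontier = {e for e in index if e.lower() in question.lower()}
    seen = set(frontier)
    found: list[Triple] = []
    for _ in range(hops):
        next_frontier: set[str] = set()
        for entity in sorted(frontier):  # sorted for deterministic output
            for t in index[entity]:
                if t not in found:
                    found.append(t)
                for neighbor in (t[0], t[2]):
                    if neighbor not in seen:
                        seen.add(neighbor)
                        next_frontier.add(neighbor)
        frontier = next_frontier
    return found


def to_context(triples: list[Triple]) -> str:
    """Render triples as lines of text to place in the LLM prompt."""
    return "\n".join(f"{s} --{r}--> {o}" for s, r, o in triples)


if __name__ == "__main__":
    kg = [
        ("Aspirin", "treats", "Headache"),
        ("Aspirin", "interacts_with", "Warfarin"),
        ("Warfarin", "is_a", "Anticoagulant"),
        ("Ibuprofen", "treats", "Headache"),
    ]
    print(to_context(retrieve_subgraph("What does Aspirin interact with?", kg, hops=2)))
```

With one hop, only Aspirin's own two relationships are retrieved; with two hops, the context also includes that Warfarin is an anticoagulant. Multi-hop facts like this are what Graph RAG can surface and flat document retrieval tends to miss.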

Questions Need Reliable Answers — Leveraging RAG in the Enterprise

Answering enterprise questions often requires accessing data spread across multiple tables in a database. Here’s one approach to using RAG to produce a reliable response:

- Ask a question

- Convert the text prompt into SQL

- Query the database to extract the answer data

- Apply Table RAG to the answer data table

- Summarize and present the answer
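These five steps can be wired together as below. The LLM calls vary by vendor, so they are passed in as plain functions. `fake_text_to_sql` and `fake_summarize` are hypothetical placeholders that return canned output, and an in-memory SQLite database stands in for the enterprise database. The SELECT-only check is a minimal guardrail; a real deployment would need stronger validation of generated SQL.

```python
import sqlite3
from typing import Callable

# Hypothetical signatures: an LLM-backed text-to-SQL function and an
# LLM-backed summarizer that receives the answer table (column names + rows).
TextToSQL = Callable[[str], str]
Summarizer = Callable[[str, list[str], list[tuple]], str]


def answer_question(question: str, conn: sqlite3.Connection,
                    text_to_sql: TextToSQL, summarize: Summarizer) -> str:
    # Step 1: the user's question arrives as plain text.
    # Step 2: convert the text prompt into SQL (an LLM call in practice).
    sql = text_to_sql(question)
    if not sql.lstrip().lower().startswith("select"):
        raise ValueError(f"refusing to run non-SELECT SQL: {sql!r}")
    # Step 3: query the database to extract the answer data.
    cursor = conn.execute(sql)
    columns = [d[0] for d in cursor.description]
    rows = cursor.fetchall()
    # Steps 4-5: apply Table RAG to the answer table and summarize it.
    return summarize(question, columns, rows)


def fake_text_to_sql(question: str) -> str:
    """Hypothetical stand-in for an LLM text-to-SQL call."""
    return ("SELECT c.region, SUM(o.amount) AS total "
            "FROM orders o JOIN customers c ON o.customer_id = c.id "
            "GROUP BY c.region ORDER BY total DESC")


def fake_summarize(question: str, columns: list[str], rows: list[tuple]) -> str:
    """Hypothetical stand-in: a real system would give this table to an LLM."""
    top_region, top_total = rows[0]
    return f"{top_region} has the highest order total ({top_total})."


def make_demo_db() -> sqlite3.Connection:
    """Two related tables, so answering requires a join."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT);
        CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
        INSERT INTO customers VALUES (1, 'West'), (2, 'East');
        INSERT INTO orders VALUES (1, 1, 100.0), (2, 1, 50.0), (3, 2, 80.0);
    """)
    return conn


if __name__ == "__main__":
    question = "Which region has the highest order value?"
    print(answer_question(question, make_demo_db(), fake_text_to_sql, fake_summarize))
```

Keeping the SQL step separate from summarization means the numbers in the final answer come from the database query, not from the model's memory.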

By combining the language generation capabilities of LLMs with the structured knowledge stored in tables, Table RAG enables more precise and reliable responses, especially in domains like finance, healthcare, and customer service where tabular data is prevalent.

The App Orchid Approach to Applying RAG to Enterprise Data

The App Orchid platform is built specifically to handle enterprise data challenges, using LLMs in innovative ways to improve both cost-efficiency and accuracy.

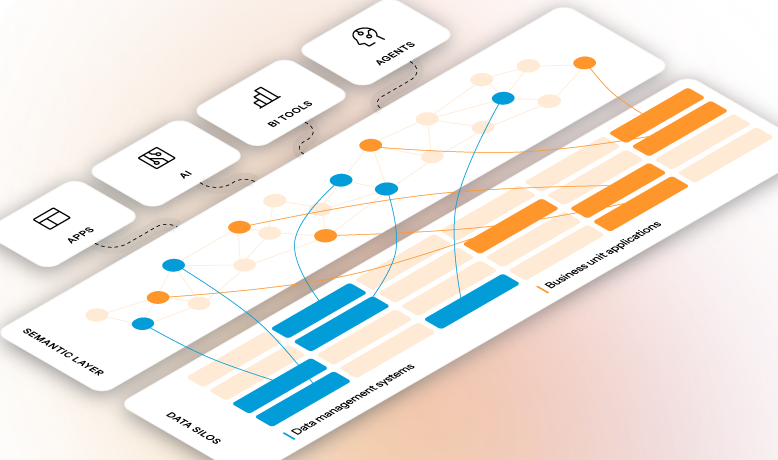

At the core of our approach is an ontology—a structured representation of enterprise data that includes:

- Key Values: Capturing the essential values from underlying data sources as nodes within a graph.

- Relationships: Mapping connections between data entities to create a contextual web of information.

- Contextual Metadata: Providing LLMs with explanations, such as what table headers mean, to improve data interpretation without exposing the data itself.

The LLM can read the relationships between data entities but not the underlying data itself, which is important for data security and privacy. The ontology can also draw on multiple data sources and data stores at once to answer a question, saving data engineering time and costs.
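The idea that the LLM sees the ontology but not the data can be illustrated with a small data structure. In this sketch, the class and field names are hypothetical and not App Orchid's actual schema. Each node records which data store and table hold a concept along with a plain-language description, edges record relationships, and the prompt context is built only from descriptions and relationships, so no row values are ever read or sent to the model.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str         # business concept, e.g. "Customer"
    source: str       # data store holding it (hypothetical identifier)
    table: str        # physical table the concept maps to
    description: str  # contextual metadata, e.g. what a column means


@dataclass
class Edge:
    src: str
    relation: str
    dst: str
    join_on: str      # how the relationship maps onto the underlying tables


@dataclass
class Ontology:
    nodes: dict[str, Node] = field(default_factory=dict)
    edges: list[Edge] = field(default_factory=list)

    def add_node(self, node: Node) -> None:
        self.nodes[node.name] = node

    def add_edge(self, edge: Edge) -> None:
        if edge.src not in self.nodes or edge.dst not in self.nodes:
            raise KeyError("both endpoints must be registered nodes")
        self.edges.append(edge)

    def llm_context(self) -> str:
        """Describe concepts and relationships only: no row values are read,
        and physical details (source, table, join_on) stay out of the prompt."""
        lines = [f"{n.name}: {n.description}" for n in self.nodes.values()]
        lines += [f"{e.src} -[{e.relation}]-> {e.dst}" for e in self.edges]
        return "\n".join(lines)


if __name__ == "__main__":
    onto = Ontology()
    onto.add_node(Node("Customer", "crm_db", "customers", "A company that buys from us"))
    onto.add_node(Node("Invoice", "billing_db", "invoices",
                       "A bill issued to a customer; the amount column is in USD"))
    onto.add_edge(Edge("Customer", "has_invoice", "Invoice",
                       "customers.id = invoices.customer_id"))
    print(onto.llm_context())
```

The two nodes live in different data stores (`crm_db` and `billing_db`), yet the model sees a single connected vocabulary; the physical mapping is kept for the later SQL-generation step.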

Here’s how the App Orchid platform responds when a user asks a question:

- Question Parsing and Disambiguation: The platform parses the question and relies on the LLM for disambiguation.

- Graph Query Creation: The LLM constructs a graph query by identifying the necessary nodes (data objects) and edges (relationships) within the graph.

- SQL Generation: App Orchid then generates SQL queries for each database that needs to be accessed to retrieve the required data.

- Answer Dataset Formulation: The platform compiles the retrieved data into an answer dataset, formatted and ready for enterprise reporting, analytics and more.
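The SQL generation and answer dataset steps can be illustrated with a small sketch. This is not App Orchid's implementation; it runs under stated assumptions. The `CATALOG` mapping from graph nodes to databases, tables, and columns is hypothetical. Two in-memory SQLite databases stand in for separate enterprise stores, and the cross-database join is done in Python on a shared key.

```python
import sqlite3

# Hypothetical catalog mapping graph nodes to physical storage. The "key"
# columns are chosen so they match across the Customer -> Invoice edge.
CATALOG = {
    "Customer": {"db": "crm", "table": "customers", "key": "id",
                 "columns": ["name", "region"]},
    "Invoice": {"db": "billing", "table": "invoices", "key": "customer_id",
                "columns": ["amount"]},
}


def generate_sql(node: str, attributes: list[str]) -> tuple[str, str]:
    """Return (database name, SELECT statement) for one node of the graph query.
    Attributes are checked against the catalog, so only known column names
    are ever interpolated into the SQL string."""
    spec = CATALOG[node]
    unknown = set(attributes) - set(spec["columns"])
    if unknown:
        raise ValueError(f"unknown attributes for {node}: {sorted(unknown)}")
    cols = ", ".join([spec["key"], *attributes])
    return spec["db"], f"SELECT {cols} FROM {spec['table']}"


def answer_dataset(src: str, src_attrs: list[str], dst: str, dst_attrs: list[str],
                   connections: dict[str, sqlite3.Connection]) -> list[dict]:
    """Run one query per database, then join the results in Python on the
    edge's key (assumed here to be src key == dst key)."""
    src_db, src_sql = generate_sql(src, src_attrs)
    dst_db, dst_sql = generate_sql(dst, dst_attrs)
    by_key = {row[0]: row[1:] for row in connections[src_db].execute(src_sql)}
    dataset = []
    for key, *values in connections[dst_db].execute(dst_sql):
        if key in by_key:
            dataset.append(dict(zip(src_attrs + dst_attrs, (*by_key[key], *values))))
    return dataset


def make_demo_connections() -> dict[str, sqlite3.Connection]:
    """Two separate in-memory databases standing in for separate enterprise stores."""
    crm = sqlite3.connect(":memory:")
    crm.executescript("""
        CREATE TABLE customers (id INTEGER, name TEXT, region TEXT);
        INSERT INTO customers VALUES (1, 'Acme', 'West'), (2, 'Globex', 'East');
    """)
    billing = sqlite3.connect(":memory:")
    billing.executescript("""
        CREATE TABLE invoices (customer_id INTEGER, amount REAL);
        INSERT INTO invoices VALUES (1, 100.0), (2, 75.0), (1, 25.0);
    """)
    return {"crm": crm, "billing": billing}


if __name__ == "__main__":
    conns = make_demo_connections()
    for row in answer_dataset("Customer", ["name"], "Invoice", ["amount"], conns):
        print(row)
```

Validating attribute names against the catalog before building SQL keeps LLM-chosen identifiers from reaching the database unchecked. The resulting list of dicts is a plain answer dataset that can feed a chart, an analytics job, or a summarization prompt.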

The output data is designed for seamless integration into visualizations, analytics, and AI, as well as LLM operations such as summarization or Table RAG. The App Orchid platform provides tools to automate visualizations, deliver advanced analytics, and offer real-time AI model inference for users.

The Best Path to AI-Ready Data

Experience a future where data and employees interact seamlessly, with App Orchid.